Today, I show how to cheat the halting problem and why Web3 of all industries popularized the underlying principles. We will look at how untrusted user code is sanitized in eBPF as used in the Linux kernel, in Bitcoin script, in EVM, and, of course, in Wasm.

This is part 4 of the Wasm in the Wild series, which now has a nice series overview page.

Introduction

In an ironic twist, WebAssembly and Web3 share the “Web” prefix for the wrong reasons.

WebAssembly (Wasm) is an assembly instruction format with an abstract machine designed to run anywhere, even inside the JS runtime of modern browsers. Hence, the first “Web” comes from the association with the browser.

The “Web” in Web3, however, comes from blockchain inventors’ desire to claim their technology will be the foundation of the next evolution of the World Wide Web. At least that is my take on the matter, seeing that Gavin Wood, co-founder of Ethereum and creator of Polkadot, coined the phrase Web3.

I believe both terms would be better off without the prefix. Despite its name, WebAssembly is not limited to Web applications. Likewise, Web3 is not a complete replacement for Web 2.0, regardless of what the name suggests.

Allow me a quick analogy. Web3 doesn’t replace the current Web any more than a new seasoning for your kitchen replaces your cooking style. Sure, you will try the new flavor. You might like it for some meals. But it won’t change most of your recipes.

I think the name Web3 is too grandiose. People would have more realistic expectations if the name were more humble.

Nevertheless, here I am talking about how Web3 and WebAssembly connect. It doesn’t involve the Web but instead a tale of trust. If you don’t want to trust the servers of big tech in Web 2.0, then you need a computing model that works in untrusted environments. WebAssembly turns out to be a contender for solving just that problem.

In line with my previous two posts, Why WebAssembly Came to the Browser and Why WebAssembly Came to the Backend, I want to start by explaining the broader context of Web3, including the competing technologies to Wasm in that space. Following that, the heart of the article contrasts technical details of how Wasm limits finite resources compared to other virtual machines in Web3. Finally, I conclude with a discussion on the general security aspects of Wasm in this space.

Feel free to jump to the sections most interesting to you!

Part 1: Understanding Web3

The Wasm in Web3 Landscape

Among the top 50 crypto tokens by market cap today, I found four ecosystems using a Wasm runtime in their nodes. Namely Polkadot, Near Protocol*, Internet Computer, and Cosmos. Several other projects provide the tooling to execute Wasm smart contracts, too, but as far as I can tell none of them actually include a Wasm runtime, hence they are not directly relevant to this article.

*Disclosure: I have been an active and paid contributor to Near Protocol for more than two years, and this is where most of my hands-on expertise on the topic comes from.

Smart contract blockchains could have used any existing language, really. Something like Java wouldn’t be too bad of a choice. Heck, they could even allow submitting Dockerfiles to the chain to download and execute pretty much anything on the chain. But using Wasm has its benefits.

The main reasons for Wasm are performance, portability, and security. Fast startup times are essential. It reduces the overhead per smart contract invocation and, hence, the cost charged to users. Furthermore, it keeps latency down, which is one of the pain points of interactive Web3 apps.

Then, there is the big issue of limiting the resource usage, measured in compute time and memory, that user programs can consume. As should become apparent in this article, it is not easy to have strict and deterministic limits on untrusted code without compromising on performance. I believe in 2024, Wasm has one of the cleanest solutions for this problem. But to really understand the problem and the iterative approach to solve it, we have to start with the motivation and history of Web3.

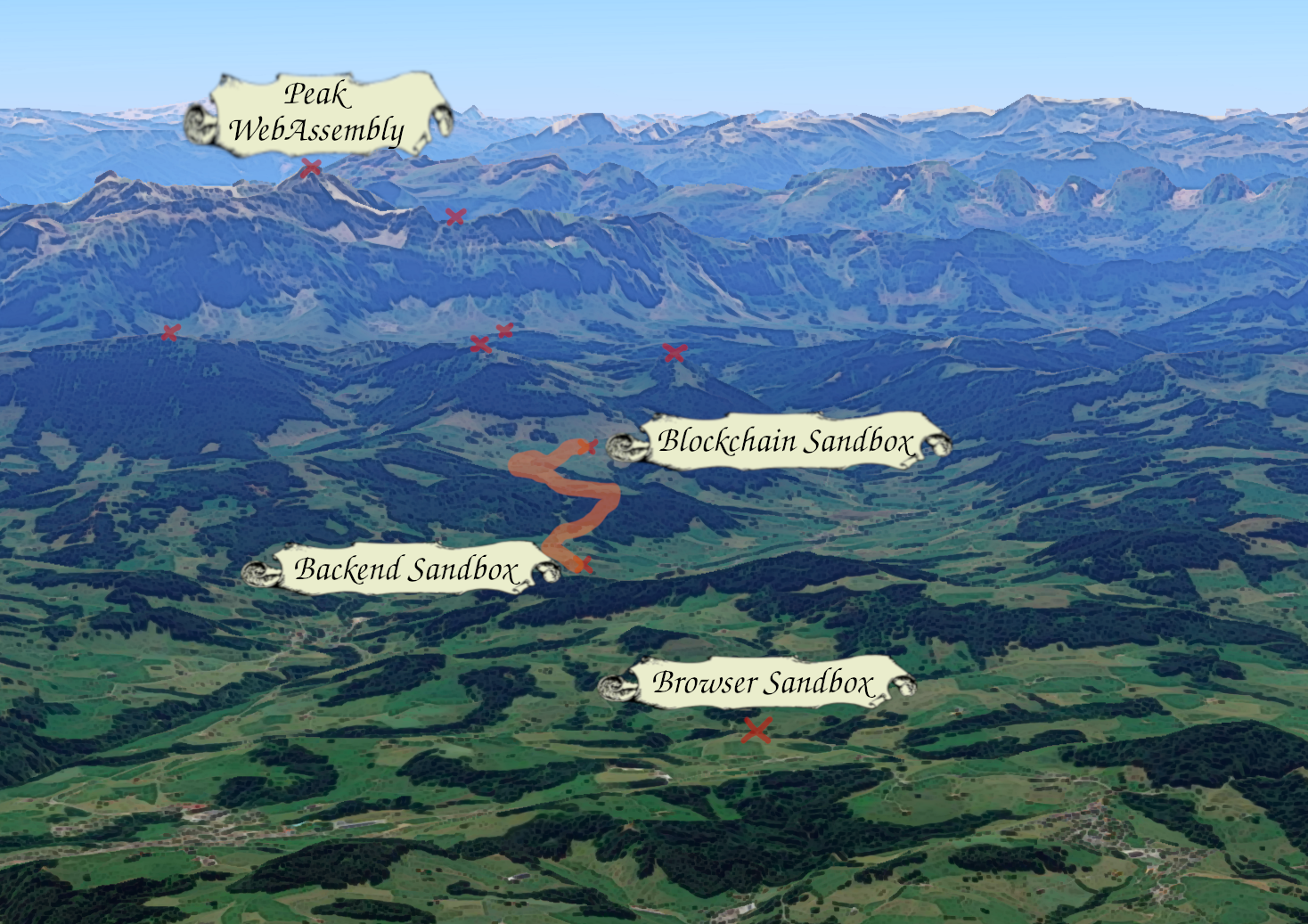

We turn around from the village we’ve rested in and walk up the next hill.

We turn around from the village we’ve rested in and walk up the next hill.

Web3: If you love Bitcoin, what could be better than Bitcoin?

It’s easy to get hung up on technical terms when discussing blockchains. But to understand the core innovation of Bitcoin, you don’t need a degree in computer science. The goal is to have money that is not controlled by a central entity. Not a state, not a bank, or any other kind of company or organization. But still, somehow, we need to keep a record of who owns how much of this money.

Now, how this is achieved is where it gets technical. If we have a central, trusted party with the last word on who owns what, then this is a centralized system. Powerful actors like government can influence such a system, or straight out seize it. More practically speaking, most people only trust a centralized system if it is backed by a government. That’s why your local supermarket accepts your local state-issued currency but not the brownie points you get from purchasing games on Steam.

Okay, in order to have state-independent money, central parties are forbidden. With that constraint, how can it be solved? With Bitcoin, it starts with a rule set of how the network should operate. Then many parties run code that follows these rules on their machines. They are the nodes of the Bitcoin network.

All those nodes replicate every Bitcoin transaction locally, and they constantly share a state hash with the rest of the network. If everyone has the same outcome, this is the global state, and all is good. If there are mismatches, there are two possibilities.

- Either different nodes applied different transactions. This is a common occurrence in Bitcoin.

- Some nodes didn’t apply the rules correctly. They could be malfunctioning or perhaps acting with malicious intent.

In either case, a complicated form of democratic voting follows to figure out the correct state. This works as long as a sufficiently large majority follows the rules correctly.

And with that, we have a global state machine that is only controllable by the majority. A democratic system if you will, except we count machines compute power rather than individual people to sum up votes. When people ask me what is so profound about it, I say it’s global and open like email but for money.

For those of us who believe in this principle idea behind Bitcoin, we can ask a natural follow-up question. If it works for financial transactions, why not make it more general?

At this point, the scientists are not too concerned about the question whether they should, it’s mainly about the question whether they could. And I need you, the reader, to accept this for now and just focus on the technical aspects of it, for I am a scientist and not a politician.

If Bitcoin represents the first state-independent Internet money, Web3 is the first globally shared general-purpose computer. Again, there should be no central entity controlling it, but we still want a globally shared state.

Ethereum is the first and most successful iteration of this. The idea is simple, take Bitcoin but allow executing small programs instead of only financial transactions.

If Bitcoin has transactions like “Send 1 BTC to Alice and record the new balances”, an Ethereum transaction is more like “Execute this Python script and persist all changes it makes”. Except, it’s not Python because that would be too easy. Instead, they invented a new byte code (EVM bytecode) and created a new programming language (Solidity).

Which path would you take?

Which path would you take?

Evolution of General-Purpose Code on Blockchains

The history of execution environments used on blockchains evolved roughly in these steps.

- (2009) Bitcoin Script with basic rules for sending money.

- (2015) The Ethereum Virtual Machine (EVM) brings Turing-completeness to blockchains.

- (~2020) More specialized virtual machines enter the race:

- The Solana Virtual Machine (SVM) allows parallel execution of contracts.

- Wasm VMs are used to ensure maximum compatibility with existing languages, like Rust.

- Facebook’s Libra project creates the Move language for a smart contract programming approach based on formal verification.

This is a vast simplification of the landscape, of course, but it should be sufficiently detailed to follow the rest of the article. Below are a few more details on these projects, before moving to the main part of the article.

Bitcoin Script

Bitcoin’s state machine is limited to recording financial transactions. Transactions are there to transfer Bitcoins and nothing else. However, even Bitcoin allows scripting to programmatically enhance the transfer of money.

Ownership of Bitcoin is always defined by a list of checks the sender must pass to spend it. If those checks are passed, one can spend the Bitcoins by defining the new checks the next spender of the same coins has to pass.

The most basic check requires proof of holding the private key for a certain address. By adding the same check multiple times with different addresses, the next spending transaction has to be signed by multiple private key holders.

Bitcoin scripts are executed on a stack machine that supports arithmetic operations, cryptographic functions, access to the block timestamp, and if-else branching. This enables the creation of rather sophisticated applications, like micropayment channels. But it’s not a general-purpose computing platform.

Smart Contracts

Technically, Bitcoin scripts were the first smart contracts. Just a set of rules on how money can be spent. But today, when people talk about smart contracts, they usually refer to programs on blockchains built specifically to have more advanced scripts on chain.

Of all the things BitcoinScript could do, it notably lacks loops. By adding loops, Ethereum and other smart-contract blockchains became Turing-complete. Additionally, they allow the reading and writing of arbitrary state, which means the blockchain now has not only universal code execution but also persistent memory to facilitate stateful applications. This makes blockchains equally capable as a (very slow) personal computer.

Executing a smart contract cannot be free, since the global state machine only has limited capacity, and the node operators also have to somehow pay their bills. Therefore, compute time and storage space on a blockchain are usually paid for in the chain’s currency or a derivative of it. For the remainder of the article, I will call this the gas cost, which is the most common name for it.

Several blockchains allow writing smart contracts in Rust, which is a good fit. Smart contracts are often highly optimized to reduce gas costs, so you want to have a systems language that gives you maximum control. But bugs in them can cost you millions, so you might not write them in C. Furthermore, Rust’s powerful macro system is a staple in blockchains to massively reduce the boilerplate code developers have to write to integrate with the specific ecosystem.

Here is a full example of a smart contract written in Rust for Near Protocol to give you a better idea.

use near_sdk::near;

// This struct is persisted on-chain and loaded on every execution.

// The default implementation is used for initialization.

#[near(contract_state)]

#[derive(Default)]

pub struct CookieClickerState {

cookies: u64,

}

// The #[near] macros automatically exports functions on the Wasm

// interface, with the calling conventions and data serialization

// format defined by the Near Wasm runtime.

#[near]

impl CookieClickerState {

// This method modifies state, hence borrows mutable self.

// Callers have to buy gas to make this call.

pub fn click_cookie(&mut self) {

self.val += 1;

}

// Read-only method borrows immutable self.

// Calling this method is free and requires no Near account.

pub fn num_cookies(&self) -> u64 {

self.cookies

}

}

The example program (smart contract) stores a single u64 on the blockchain,

wrapped in a CookieClickerState struct. You could build a frontend for it with

a giant cookie image that, when clicked, submits a transaction that calls

click_cookie. There are no permission checks in this program, so anyone can

call this function. That’s kind of the point of having this big shared Web3

computer. Anyone can interact with your code as long as they pay for the

computing costs by buying enough gas. Likewise, deploying the contract is open to

anyone who has the gas for it.

If you have read my previous post, maybe you remember how Wasm modules are now used to host simple applications on a cloud without the need to set up any infrastructure. Imagine this, but the hosting provider is this uncontrollable global state machine. This could be valuable if you care about censor resistance, for example.

Some people see this as a revolution in how applications are hosted, and some people think it’s just hot air. I’m in between. Let the technology prove what it can achieve. As I said already, I don’t believe it replaces the Web as we know it. However, I can see some parts of the World Wide Web being backed by blockchain technology with real-life advantages over other models.

Ultimately, though, this is a technical blog. Whether you think these platforms are valuable to humanity or not, there is a real industry behind them that has spent a lot of engineering time building the technology. And I’m here to talk about that engineering in exorbitant detail, so please keep reading if that’s up your alley.

We finished the ascent, now it’s mostly straight forward.

We finished the ascent, now it’s mostly straight forward.

Part 2: Technical Challenges of On-Chain Execution

In this second part, things will get more technical. I want readers to understand that despite theoretical proofs that it’s not possible to know how long a program will run, we can indeed limit a program’s execution time without relying on interrupts. And Web3 needs this because interrupts would be non-deterministic.

To fully understand the details, we will touch on some compiler engineering. But don’t worry, I will explain the necessary concepts as we move along.

Enforcing Finite Resource Limits

Why Resource Limits are Necessary

Smart contract blockchains executing a random subset of untrusted tiny programs at any moment. New code is submitted constantly and we can make no assumptions on whether the code is by a well-intentioned user, a troll, or an evil attacker.

With these constraints in mind, what are you doing with the following program?

loop { }

Someone could submit such code and the system must be able to handle it. But if you just execute it and run to completion, well, congratulation, your entire network is now hanging in this infinite loop.

I suppose you could just run it with a 1 ms timeout and let the execution fail with an error. But what if it’s instead this code?

for _ in (0..1_000_000) { }

Interrupting a system after a fixed amount of time is non-deterministic. Of the many nodes replicating the same work, some might be able to just finish the loop, while other nodes with weaker hardware run into the timeout. Now the nodes struggle to agree on the result, some will say the execution failed while others will say it succeeded.

The most common solution is to keep counting how many operations are executed and interrupt after a fixed number, making it deterministic across hardware. Although, in reality, blockchains convert from instructions to gas and interrupt after the purchased gas is all burnt. But the idea is the same, we just need the number of executed instructions to have a fixed limit upfront.

This is one problem. Another problem is memory limits. Specifically, stack usage can be tricky. Consider this code.

fn foo() {

foo();

}

An infinite recursion like this usually results in a stack overflow. This is because every invocation of a function pushes the current frame pointer and the return address to the stack, to facilitate the restoration of them when the called function returns. Thus, the stack height grows with the recursion depth.

Just how big the stack’s capacity is by default depends on your OS. This affects the maximum recursion depth before the stack overflow happens.

In the blockchain space, at the very least, you need to ensure the stack limit is standardized, or else there will be programs for which some clients crash with a stack overflow while others run it just fine.

Furthermore, you better make sure this is running in a sandboxed environment that triggers a stack overflow internally before the host runtime process itself exhausts its stack space. The user program may crash, but you don’t want the full client to crash.

Again, you could address this issue with explicit checks on every execution step. Then you simply stop the program when you notice the limits are surpassed. This is a correct way to handle it but absolutely tanks performance. Inserting several conditional branches between every single pair of instructions you execute is a sure path to create a largely unusable system.

Instead, we would like to know the resource requirements ahead of time and only start running a piece of code if it fits in the limits. If we are sure it fits, we don’t have to check. We just run it to completion. This is much more efficient.

Sadly, the more complex the executed language is, the harder it is to know the limits ahead of time. Indeed, it follows from the halting problem that no algorithm can figure out how many operations an arbitrary program will run for.

Luckily, we don’t have to solve the general halting problem, we just have to know if it finishes after a certain number of instructions. For some programs, this will be trivial to know. After all, the halting problem only states that there are particularly nasty programs for which we cannot know, it doesn’t say we cannot know for any program.

If we are smart about it, we can limit the input programs to a format that makes it possible to know the limit, and we can reject any programs for which we cannot figure it out. Bitcoin Script is on the most restrictive end of this, so let’s see how it escapes the halting problem.

Bitcoin Script

Bitcoin script is restricted by design to make execution trivial and help with restricted execution. In particular, there is no way of moving the instructor pointer backward. Instructions are executed down from the top, not going back, only potentially skipping some lines due to if/else statements. This means every instruction in the code is executed at most once.

Consequently, we can just look at the code size and derive a maximum number of instructions it could contain. This is also the maximum of instruction that an execution will visit.

Bitcoin clients therefore don’t have to worry about running any script for too long. They just check the size limit of a block and if that checks out, they run every script in it to completion without any sort of interrupt.

The stack size is even less of a problem for Bitcoin Script. For one, it does

not support function calls. Nor does it have a way to allocate X variables

in a single instruction. The best you can do to fill the stack is to use

OP_PUSHDATA4 to push a large constant on the stack. However, the entire constant

has to be included in the input script as well. Hence, the total stack usage is always

limited by the size of the input script. So, the Bitcoin solution is to limit

the size of transactions, and that gives the stack limit for free.

Strictly speaking, Bitcoin transactions are not individually limited in size. Only the total block size limit is enforced on the protocol level. But most Bitcoin clients won’t include individual transactions larger than 100 kB in the blocks they mine. They will, however, accept any size if other clients include larger transactions.

Which brings me to an important point about blockchains. Most clients implementing a sanity check is not a valid mechanism to prevent abuse. Limits have to be defined on the protocol level and enforced by any standard compliant client, or otherwise there will be someone going around it. And that’s how we get this magnificent 3.9 MB image stored on the Bitcoin blockchain.

This was achieved with OP_PUSHDATA2, and the 1803x1803 JPEG file dumped as a

binary constant right in the script, putting the entire thing right on the

stack when executing the transaction. As far as I can tell, this ~4MB is

the largest stack usage bitcoin script ever caused and you cannot make it

significantly larger.

EVM

Ethereum’s founders were not content with a simple language like Bitcoin script for their smart contracts. No way would they settle for something that’s not Turing-complete. And with that, the halting problem is back to bite us.

The EVM supports backward jumps, which makes loops possible. But unlike the

jump from x86, it’s not possible to jump to an arbitrary line of code. The EVM

only allows a jump to target an operation called JUMPDEST. This is a no-op

when executed but crucially marks what places JUMP and JUMPI are allowed to

target.

The explicit label markers for where a jump can go make it easy to split a program into what compiler engineers call basic blocks. Code inside a basic block has no control flow, which makes it easy to know the resource requirements ahead of time. If the block has 10 instructions inside, executing the block is just 10 instructions, simple as that.

For stack usage, we can go through all instructions in the block once and take note of the maximum stack usage for the block. If a block adds 16 bytes to the stack, then we know we can only execute this block if we have at least space for 16 more bytes.

Basic blocks are connected in a control-flow graph (CFG). An EVM can compute the CFG and insert limit checks at every transition between basic blocks. If the next basic block to be executed breaches the limit, execution can be stopped right there, and the transaction will be marked as failed.

In a way, the trick is to split the Turing-complete code into non-Turing-complete pieces and only do the expensive counting when going from one piece to another. That’s usually much better for performance than checking on every single instruction. But it still fulfills the requirements for deterministic stack and execution time limits.

If this went a bit too fast, don’t worry. I will go deeper into how this works for Wasm later, with some graphs for visualization. This was just a high-level idea.

eBPF, RBPF

While the Ethereum founders invented a new programming language and defined their own set of opcodes, other projects did not want to reinvent the wheel. For example, Solana programs use RBPF opcodes. Those are based on eBPF, which is best known for its use in the Linux kernel.

eBPF allows users to run code in the Linux kernel without root privileges. Sounds dangerous? Luckily, there are strict checks performed on the code before it gets executed.

The eBPF verifier in the kernel allows no pointer accesses that it can’t prove are safe, which is made possible with pre-defined address ranges and special treatment for certain kernel objects.

It also forbids unbounded loops. It can check that by constructing the CFG and rejecting programs with cycles in them. In order to pass that verifier with loops, you have to unroll your loops or use the provided helpers for loops with a guaranteed upper limit of iterations.

These checks make sense. You don’t want non-root users to be able to hang the kernel in an infinite loop or corrupt its memory.

It’s pretty impressive what checks the eBPF verifier can do these days without limiting expressiveness too much. Check out the concepts section in eBPF docs if you want to dig deeper.

The same checks naturally would be super helpful for blockchain engineers looking for a way to run code on-chain. However, it can also be challenging for smart contract developers to create programs that pass the strict eBPF verifier.

Solana opted for RBPF, a user-space VM written in Rust that executes eBPF instructions but lacks the thorough verification steps of the in-kernel VM. So, it uses the same opcodes but didn’t copy the powerful verifier. They did, however, implement their own verifications to a similar effect.

I couldn’t find a good description of what exactly is being checked in Solana’s case. But what I could gather from reading the source code of Solana’s fork of RBPF, it can detect certain cases of infinite loops. But unlike the kernel, they also use dynamic instruction counters to limit total work with the purchased gas, so in the end it seems very similar to what Ethereum does.

Writing eBPF programs, for the kernel or for Solana, is possible in many languages, thanks to the LLVM integration of eBPF. For the kernel, eBPF programs are usually written in C using bpf-helpers. But for Solana, the programming language of choice is Rust. That’s why Solana’s ecosystem contributes a large piece to the Web3 Rust job listings.

By using eBPF and Rust, a bytecode format already established in the Linux kernel and the most-loved language of the decade, it seems Solana really picked up existing tech rather than falling victim to the not-invented-here syndrome. But we can take compatibility with existing tools one step further by using Wasm.

Destination locked in.

Destination locked in.

Wasm

With the fast startup time of Wasm runtimes and a bytecode format designed for cheap static analysis, Wasm is perfectly suited for use in blockchains. I’ve written a bit about Wasm static analysis in earlier posts, but let’s take a deeper dive today.

Structured Control Flow and Basic Blocks

First, Wasm only has structured control flow. In other words, there are no

goto ADDRESS or other ways to express a jump instruction. This severely limits the

possible jump targets.

In Wasm, control flow is only altered by function calls,

loop, if/else, or an explicit br (break).

This means that if you write out a Wasm program in the typical WAT syntax with

round brackets and proper indentation, you are almost done with constructing the

basic blocks

of the code! Only function calls need an extra step, but that is trivial, too.

Let’s look at an example. Take a loop with an increasing number and a print.

pub fn foo() {

for i in 0..1000 {

print_num(i);

}

print("done");

}

This compiles to the following Wasm.

func $foo (type 2)

(local i32)

i32.const 0

local.set 0

loop

local.get 0

call $print_num

local.get 0

i32.const 1

i32.add

local.tee 0

i32.const 1000

i32.ne

br_if 0

end

i32.const 1048576 (; pointer to "done" ;)

i32.const 4 (; string slice length ;)

call $print

Constructing basic blocks based on indentation produces a first CFG.

This isn’t quite right for basic blocks, which shouldn’t contain any control flow. Function calls should be split, too. Let’s add that in.

Now, we have a bunch of atomic blocks. If we start executing one, it will be executed to the end, independent of the program state. Therefore, we can statically determine the cost of each basic block of code.

To connect it back to what we learned previously, we can treat each basic block like a non-Turing-complete Bitcoin Script, for which we can always figure out an upper limit on time and stack height before even executing it.

But we don’t have to do a rough estimate based on just the number of instructions. We can afford to iterate the code once and look at each instruction type included. This allows to find the stack layout for every line of Wasm code, as well as sum up a specific instruction cost.

For the stack height, we can use the WebAssembly stack machine static analysis I described in my first post in the series Fundamentals of WebAssembly. Applied to our sample code, we get the following stack structure per line of code.

| before | after | |

|---|---|---|

| i32.const 0 | [] | [i32] |

| local.set 0 | [i32] | [] |

| loop | [] | [] |

| local.get 0 | [] | [i32] |

| call $print_num | [i32] | [] |

| local.get 0 | [] | [i32] |

| i32.const 1 | [i32] | [i32,i32] |

| i32.add | [i32,i32] | [i32] |

| local.tee 0 | [i32] | [i32] |

| i32.const 1000 | [i32] | [i32,i32] |

| i32.ne | [i32,i32] | [i32] |

| br_if 0 | [i32] | [] |

| end | [] | [] |

| i32.const 1048576 | [] | [i32] |

| i32.const 4 | [i32] | [i32,i32] |

| call $print | [i32,i32] | [] |

You may see that the largest the WebAssembly stack grows is to [i32,i32], which

requires 8 bytes. Hence, we account for 8 bytes on the stack.

This was the Wasm stack cost, which is probably stored on the native stack but doesn’t necessarily have to be, depending on the runtime implementation. But I will assume here that it’s just placed one-to-one on the native stack by the runtime.

On top of that, the runtime should also account for the native stack costs, like stack pointer and return pointer. But the specific of that depend on what the native architecture is, so I will not include it here. But if you are actually building this, better make sure you do.

We can now augment the graph with the necessary gas and stack checks. I will

assume every operation costs one gas, except for things like loop or end, which

are already removed from the graph anyway. Other than that, just count the number

of instructions in a basic block, and you get the amount of gas it should cost to

enter the block.

Note the “frame + 1” thing at the start. This is to pay for setting up

(local i32). If it was (local i32 i32 i32) instead, we would account

for three units. This isn’t an actual operation to execute. Hence, it is listed

separately. But the runtime may have to do work proportional to the number of

local variables when setting up the function frame. So, if you are doing gas

accounting in your Wasm runtime and you are being serious about it, you should

account some gas for that, too.

Beyond that, you can see that gas is accounted for every basic block, while the stack height is only added once at the start of the function and subtracted once at the end. This is possible because the stack height is always fixed per line of code, no matter how many times the same line is visited due to loops.

Accounting only once per function means that we pay for the most expensive possible path in the function in terms of stack height, even if we don’t take it. But this granularity is usually good enough. I would do the same for gas if I could. Unfortunately, for gas, we have to count loop iterations.

Not shown in this graph, inside the print functions, there would be stack accounting, too. This is important, we cannot know, in general, the actual highest stack the call will need. We only know it within the realm of the current stack frame. As soon as we make another call, this requires another dynamic check for gas and stack height.

Okay, we have done the static analysis. All that’s left is to go from this graph to actually modified code with the checks inserted.

Inserting Accounting Logic

Once you know how much gas and stack height to charge for each transition between basic blocks, the next question is how to charge it. One option is to insert extra Wasm instructions between basic blocks. In this approach, we transform the untrusted input Wasm to a trusted Wasm with some extra instructions in it. We call this output instrumented code.

func $foo (type 2)

(local i32)

block

i64.const 8

i64.const 1

call $wasm_stack

i64.const 2

call $wasm_gas

i32.const 0

local.set 0

loop

i64.const 2

call $wasm_gas

local.get 0

call $print_num

i64.const 7

call $wasm_gas

local.get 0

i32.const 1

i32.add

local.tee 0

i32.const 1000

i32.ne

br_if 0

i64.const 3

call $wasm_gas

end

i32.const 1048576 (; pointer to "done" ;)

i32.const 4 (; string slice length ;)

call $print

end

i64.const 8

i64.const 1

call $wasm_unstack

In the code above, we call functions wasm_stack and wasm_gas. These need to

be provided as imported functions and perform the necessary checks. But you

could also provide it through another Wasm module, using the component model,

and do the accounting in a different Wasm module. That would make it completely

Wasm runtime agnostic.

Now, you can take this instrumented code and run it in any Wasm runtime. Even if they have no internal concept of gas accounting, as long as they implement the Wasm standard, they will deterministically produce the exact same result on all machines and are limited to whatever count of instructions and stack height you choose.

But maybe this is not efficient enough for you. Maybe you don’t want extra Wasm instructions and function calls just to do the accounting. What you can do instead is add the instrumentation right in the compile-to-native phase of the runtime, using a technique you might know under the name of intrinsics. Intrinsics are built-in functions that the compiler replaces with something on its own, rather than provided code.

Again, we start with parsing and verifying, while also computing the basic blocks and gas/stack info. Then, instead of transforming the Wasm, we emit, for example, x86 instructions directly. In that case, we can optimize to the point where the gas and stack height values are always kept in the same native CPU registers and checks are inlined.

The result of executing this x86 will be equivalent to the Wasm transformation approach. At the cost of tying it to the runtime’s compiler, it is now pretty cheap to account for gas and stack limits.

Near Protocol more or less implements what I just described. Nagisa designed and open-sourced the specs for it in finite-wasm as an extension to the general Wasm spec. Using this crate, you can define the gas and stack-height weights you find appropriate for your runtime, and then the library will do the Wasm-to-Wasm transformation for you.

For optimized use in production, Near Protocol also uses finite-wasm to do the same static analysis. Then, a custom singlepass compiler, originally forked from wasmer’s singlepass compiler, directly emits the x86_64 instructions. The instrumented executable is then stored in a cache for fast access if the same program is invoked again.

The result is a rather small overhead for dynamic gas accounting when the program is actually executed. And all the while, the Wasm code is only parsed a constant number of times. That constant could, in theory, be 1, hence single-pass compiler. But in practical terms, it’s nicer to make it 2 or 3 to separate verification from instrumentation. Still, the verification + instrumentation + compilation pipeline runs in linear time as a function of the input code size, which I found quite impressive when I learned about it.

To close this section, going full circle, a similar algorithm exists for EVM, too. I couldn’t find a similar general-purpose library for it, nor is there an EVM to EVM pass for a runtime agnostic solution. But in the evmone GitHub repo, they describe the same basic ideas as far as they apply to EVMs.

That’s all about the finite resource limits. Let’s take a step back and close the discussion of Wasm in Web3 with a higher-level discussion of sandboxing properties Wasm grants and whether or not that is suitable for the blockchain space.

Main goal achieved. Time to stretch our legs before going to sleep.

Main goal achieved. Time to stretch our legs before going to sleep.

Security Beyond Resource Limits

Is the Wasm Sandbox Strong Enough for Web3?

With all this talk about strict resource limits the Wasm runtime needs to enforce to be viable for Web3 applications, shouldn’t we also talk about the sandboxing properties in general? Surely, with Web3 code moving around lots of money, it has strong privacy requirements and must be protected against side-channel attacks and so on, right?

Well, not really. In the case of blockchains, everything is executed in public anyway, with all inputs publicly visible in the transaction. There is no private information of users to protect inside the runtime. Hence, it would not really matter if different smart contracts could somehow access the data of other smart contracts.

That’s for guest data inside the Wasm runtime. On the host machine, however, there are important secrets to protect. As the name crypto-currency suggests, all important messages are cryptographically signed and verified. And the private key for signing has to be stored somewhere accessible to the node.

Now, at Near Protocol, the clients are running the Wasm runtime code in the same process as the signing code. It might be in different threads, but crucially, as far as the CPU is concerned, the guest Wasm code running shares the same address space as the host with its private keys. If the guest can somehow escape the sandbox and have general host memory access, then they could potentially find those private keys.

In a way, that’s similar to how the kernel handles eBPF. It relies on the static analysis to be bug-free, which then guarantees that there are no sandbox escapes.

In the case of the kernel, a breach would be a classical root privilege escalation, with all the bad stuff this implies. But an attacker still needs to somehow execute code on your system to exploit it.

For Near Protocol, a sandbox escape would mean someone could submit a smart contract and instantly infect all nodes in the network. In other words, it would potentially leak all private keys of all validator nodes in the network.

But hey, just don’t write any bugs in your verifier, and you are good. No pressure!

Okay, but what are the alternatives? Putting each smart contract execution into a separate process that doesn’t even have access to signing keys could give better security, of course. And it’s not that hard to do. So why isn’t it done?

Well, mostly for performance. Starting a new process every time would add

non-trivial latency. Today, Near Protocol allocates only 780 μs for starting a

smart contract. Linux can easily take longer than a millisecond for a

fork() + exec() call. So the cost of this extra layer of security-in-depth

is pretty high.

I guess one could have a pool of reusable processes, or even just use a single process running just the Wasm runtime that receives the code to run over inter-process communication channels. That would add less overhead but it still wouldn’t be free.

But beyond just the performance consideration, someone escaping the sandbox in a separate process could still communicate to the rest of system in unforseen ways and probably get it to sign arbitrary stuff that way. I would almost argue that putting it in a separate process can give a false sense of safety. A sandbox escape may be fatal either way and if you are writing such code for Web3 you better know it.

Instead, the Wasm runtime in Near Protocol mostly relies on the code being simple and implementing the Wasm security model 100% correct. This should be enough to prevent sandbox escapes, with every memory access being checked. Plus, there is this trick that since Wasm uses 32-bit addresses, it can only directly address 4 GiB of memory. This means we can reserve a region of 4 GiB virtual address space after the start of Wasm memory. The Wasm program has physically no way of even specifying a memory address outside of that, so whatever it tries to read, even if it sneaks past the memory boundary checks, it wouldn’t be able to read anything outside this buffer zone.

And yeah, it would be remiss to not mention that standard practices for running user-defined code are also being applied. Like setting the correct memory map flags for read/write/executable data regions. But this is getting outside the Wasm specific discussion.

Is this perfect security? Of course not, nothing ever is. Sandboxes have been escaped many times. Wasm is not magically invulnerable to programmer mistakes. If you need an example of a Wasm runtime escape in V8, take a look at “A Deep Dive into V8 Sandbox Escape Technique Used in In-The-Wild Exploit” by Theori Vulnerability Research.

But personally, I think the security guarantees are good enough in the case of Near Protocol. We should keep in mind that the Wasm runtime in Near Protocol uses a very simple single-pass compiler, no Just-In-Time compilation, and battle-tested Wasm code validation libraries.

Keeping it simple like that, the amount of code with potential vulnerabilities is relatively small. Isolation layers could help, but it also increases the amount of code that is responsible for isolating properties. I would rather have a small piece of hardened code that several people in the team know really well and where everybody is on edge to prevent bugs in it at all costs instead of security-in-depth with a false sense of security. This way, the team can set up continuous fuzzing and employ extra rounds of code reviews for the critical code. At least that’s the way it’s happening today.

But what do you think? Is it crazy to run untrusted code in the same process as important signing keys are stored? How would you set up such a system? Let me know in the comments on r/rust.

Thanks for reading to the end. I hope you learned a thing or two about how Rust is used in the blockchain space and specific techniques to limit resource usage of user-defined code. Corrections and fixes are welcome on the GitHub repository for this blog.